TensorFlow on M1

05 Jan 2022 / Elena Daehnhardt
Introduction

TensorFlow is a free, open-source machine learning library created by the Google Brain team. TensorFlow has excellent functionality for building deep neural networks. I have chosen TensorFlow because it is robust and efficient, and it can be used with Python. Since I like Jupyter Notebooks and Conda, I installed them as well. Below, I go through the simple steps for installing TensorFlow and these packages on an M1 Mac running macOS Monterey.

Xcode

Since I had a new computer, I started by downloading and installing Xcode from the App Store.

Homebrew

We can download Homebrew from https://brew.sh or by running the command:

```bash
/bin/bash -c "$(curl -fsSL https://raw.githubusercontent.com/Homebrew/install/HEAD/install.sh)"
```

Miniforge

When doing data science, I usually use Anaconda for managing libraries. This is why I installed Miniforge, which provides Conda builds for Apple silicon, by downloading Miniforge3-MacOSX-arm64 from the Miniforge Releases page. The installation requires running the downloaded bash script; when it prompts 'yes|no', answer 'yes' so that conda is added to the PATH.

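```bash
# Run the downloaded installer
cd ~/Downloads
/bin/bash Miniforge3-MacOSX-arm64.sh

# In a new terminal window, check that the Miniforge directories are on the PATH
echo $PATH
```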
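Besides reading the PATH, it can help to confirm that Python itself runs natively on Apple silicon rather than under Rosetta, where `platform.machine()` reports `x86_64` instead of `arm64`. The sketch below is a minimal check, assuming it is run with the Python from the Miniforge installation in a fresh terminal; the function names are hypothetical helpers, not part of any library.

```python
import platform
import shutil


def environment_report():
    """Collect a few facts relevant to a native Apple silicon setup."""
    return {
        "system": platform.system(),      # 'Darwin' on macOS
        "machine": platform.machine(),    # 'arm64' when running natively on M1
        "conda": shutil.which("conda"),   # None if conda is not on the PATH
        "brew": shutil.which("brew"),     # None if Homebrew is not on the PATH
    }


def is_native_apple_silicon(report):
    """True only for a macOS interpreter built for arm64."""
    return report["system"] == "Darwin" and report["machine"] == "arm64"


if __name__ == "__main__":
    report = environment_report()
    for key, value in report.items():
        print(f"{key:8s} {value}")
    print("native Apple silicon:", is_native_apple_silicon(report))
```

If `machine` shows `x86_64` on an M1 Mac, the interpreter is most likely an Intel build running under Rosetta, and the arm64-only packages installed in the next step would not be the right fit.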

TensorFlow and Jupyter

Next, we create a new Conda environment and install the base TensorFlow package (tensorflow-macos) and the tensorflow-metal plugin:

```bash
# Let's create a new environment called tensorflow_m1
conda create --name tensorflow_m1 python=3.9

# Activate it
conda activate tensorflow_m1

# Then, install the dependencies from Apple's channel
conda install -c apple tensorflow-deps

# Install the core TensorFlow package and the Metal plugin with pip
pip install tensorflow-macos
pip install tensorflow-metal
```
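A quick way to see whether the Metal plugin was picked up is to list the physical devices TensorFlow can see; with tensorflow-metal installed, a `GPU` entry is expected next to `CPU`. This is a small sketch run inside the `tensorflow_m1` environment; `tensorflow_devices` is a hypothetical helper name, and it returns `None` instead of failing when TensorFlow is not importable.

```python
import importlib.util


def tensorflow_devices():
    """Return the device types TensorFlow can see, or None if TensorFlow is missing."""
    if importlib.util.find_spec("tensorflow") is None:
        return None
    import tensorflow as tf
    # Each PhysicalDevice has a device_type such as 'CPU' or 'GPU'.
    return [device.device_type for device in tf.config.list_physical_devices()]


if __name__ == "__main__":
    devices = tensorflow_devices()
    if devices is None:
        print("TensorFlow is not installed in this environment")
    else:
        print("Visible devices:", devices)
        print("GPU available:", "GPU" in devices)
```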

Additionally, we can install Jupyter Notebook and JupyterLab, Matplotlib, pandas and scikit-learn:

```bash
conda install -c conda-forge jupyter jupyterlab
conda install matplotlib
conda install pandas
conda install scikit-learn
```
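To double-check what ended up in the environment, the standard library's `importlib.metadata` can report installed versions without importing the packages. The sketch below assumes the pip distribution names listed in `PACKAGES` and that the conda-installed packages also ship Python package metadata (conda-forge packages usually do); `installed_versions` is a hypothetical helper.

```python
from importlib import metadata

# Distribution names for the packages installed above (assumed pip-style names).
PACKAGES = ["tensorflow-macos", "tensorflow-metal", "jupyterlab",
            "matplotlib", "pandas", "scikit-learn"]


def installed_versions(names):
    """Map each distribution name to its installed version, or None if it is missing."""
    versions = {}
    for name in names:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


if __name__ == "__main__":
    for name, version in installed_versions(PACKAGES).items():
        print(f"{name:18s} {version or 'NOT INSTALLED'}")
```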

Test

Since the M1 chip has an integrated GPU that TensorFlow can use through the tensorflow-metal plugin, I am going to compare TensorFlow training on the GPU and CPU devices. For this, I trained a Sequential neural network model on the MNIST digits classification dataset.

```python
# Importing libraries
import pandas as pd
import numpy as np
import tensorflow as tf

# Importing required functionality
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Flatten, Dense, Dropout

# Loading and preparing data: scale pixel values from 0-255 to 0-1
mnist = tf.keras.datasets.mnist
(x_train, y_train), (x_test, y_test) = mnist.load_data()
x_train, x_test = x_train / 255.0, x_test / 255.0
```

Note that the Sequential model starts with a Flatten layer with an input shape of 28x28, because the MNIST dataset contains 28x28-pixel digit images; Flatten turns each image into a vector of 784 values. The network has one Dense layer of 128 neurons with the ReLU activation function, one Dropout layer (rate 0.2) to reduce overfitting, and a Dense output layer with 10 neurons, one per digit class. The output layer has no activation, so it produces raw scores (logits).
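As a worked check of this architecture, we can count its trainable parameters by hand: a Dense layer with n inputs and m units has n·m weights plus m biases, while Flatten and Dropout have none. The total should agree with the "Total params" line of `model.summary()`; `dense_params` is just an illustrative helper.

```python
def dense_params(n_inputs, n_units):
    """Trainable parameters of a Dense layer: one weight per input-unit pair plus one bias per unit."""
    return n_inputs * n_units + n_units


flattened = 28 * 28                      # 784 inputs after Flatten
hidden = dense_params(flattened, 128)    # 784*128 + 128
output = dense_params(128, 10)           # 128*10 + 10
total = hidden + output                  # Dropout adds no parameters

print(flattened, hidden, output, total)  # 784 100480 1290 101770
```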

```python
# Creating the Sequential model
model = Sequential([
    Flatten(input_shape=(28, 28)),  # 28x28 image -> 784-element vector
    Dense(128, activation='relu'),
    Dropout(0.2),
    Dense(10),                      # one logit per digit class
])

# Loss function: the model outputs raw logits, hence from_logits=True
loss_fn = tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True)

# Compile the model with the defined loss function, Adam optimiser and accuracy metric
model.compile(optimizer='adam', loss=loss_fn, metrics=['accuracy'])
```
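To make `from_logits=True` more concrete, here is a NumPy sketch of what sparse categorical cross-entropy computes from raw logits: a log-softmax over each row, then the negative log-probability of the true integer label, averaged over the batch. It is an illustrative re-implementation, not Keras's actual code, and the function name is hypothetical.

```python
import numpy as np


def sparse_categorical_crossentropy_from_logits(logits, labels):
    """Mean cross-entropy for integer class labels, computed from raw logits."""
    logits = np.asarray(logits, dtype=np.float64)
    labels = np.asarray(labels)
    # Subtract the row-wise maximum before exponentiating for numerical stability.
    shifted = logits - logits.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    # Pick the log-probability of the true class for each example.
    picked = log_probs[np.arange(len(labels)), labels]
    return float(-picked.mean())


# With all-zero logits, every one of the 10 classes gets probability 1/10.
uniform = np.zeros((2, 10))
print(round(sparse_categorical_crossentropy_from_logits(uniform, [3, 7]), 4))  # 2.3026
```

The uniform case gives log(10), which is roughly the loss an untrained 10-class model starts from.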

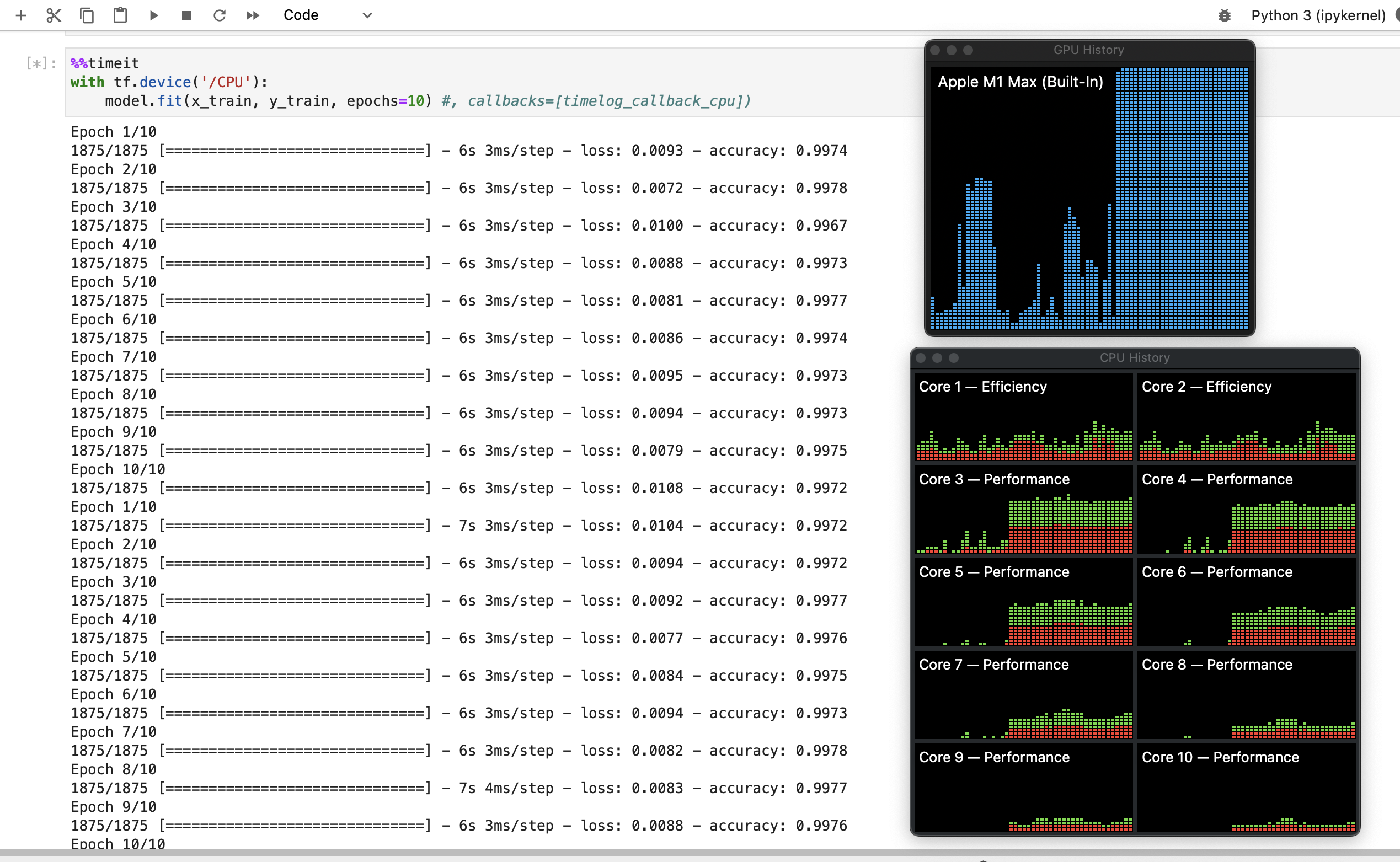

I run my code in a Jupyter Notebook, so I time each cell with the "%%timeit" cell magic:

```python
%%timeit
with tf.device('/CPU:0'):
    model.fit(x_train, y_train, epochs=10)
```

```
58.6 s ± 656 ms per loop (mean ± std. dev. of 7 runs, 1 loop each)
```

```python
%%timeit
with tf.device('/GPU:0'):
    model.fit(x_train, y_train, epochs=10)
```

```
1min 24s ± 2.06 s per loop (mean ± std. dev. of 7 runs, 1 loop each)
```
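Outside a notebook, where the "%%timeit" magic is not available, a similar measurement can be taken with the standard library. This sketch roughly mirrors `%%timeit -r 7 -n 1` (seven runs of one call each, reporting mean and standard deviation); it is an approximation, not IPython's implementation, and `time_repeated` is a hypothetical helper.

```python
import statistics
import timeit


def time_repeated(func, repeat=7):
    """Call func() once per run, `repeat` times; return (mean, std) in seconds."""
    timings = timeit.repeat(func, repeat=repeat, number=1)
    return statistics.mean(timings), statistics.pstdev(timings)


if __name__ == "__main__":
    mean, std = time_repeated(lambda: sum(range(1_000_000)))
    print(f"{mean:.4f} s ± {std * 1000:.1f} ms per loop "
          f"(mean ± std. dev. of 7 runs, 1 loop each)")
```

For the training comparison, `func` would be a small function that enters `tf.device('/CPU:0')` or `tf.device('/GPU:0')` and calls `model.fit(x_train, y_train, epochs=10)`. As with "%%timeit", every run keeps training the same model, so the runs are not independent from a learning point of view.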

Interestingly, training on the GPU device took noticeably longer: about 1 min 24 s per run, compared with 58.6 s on the CPU. When I checked GPU History in Activity Monitor, I observed that the GPU was 100% used when training on both devices. I suspect there is some overhead with the GPU option, which I will discuss with an Apple expert.
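Because GPU History only shows overall GPU load, one way to double-check where individual operations actually execute is TensorFlow's device placement logging (`tf.debugging.set_log_device_placement`) together with the `.device` attribute of an eager tensor. The sketch below is a diagnostic idea, not an explanation of the slowdown; the matrix sizes are arbitrary and `placement_of_matmul` is a hypothetical helper.

```python
import importlib.util


def placement_of_matmul(device_name):
    """Run a small matmul under tf.device and return the device TensorFlow reports."""
    if importlib.util.find_spec("tensorflow") is None:
        return None
    import tensorflow as tf
    with tf.device(device_name):
        a = tf.random.uniform((256, 256))
        b = tf.random.uniform((256, 256))
        c = tf.matmul(a, b)
    # For eager tensors, .device is a full name such as '.../device:CPU:0'.
    return c.device


if __name__ == "__main__":
    if importlib.util.find_spec("tensorflow") is None:
        print("TensorFlow is not installed in this environment")
    else:
        import tensorflow as tf
        tf.debugging.set_log_device_placement(True)  # log where each op runs
        for name in ("/CPU:0", "/GPU:0"):
            print(name, "->", placement_of_matmul(name))
```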

Conclusion

To sum up, we have installed Xcode, Homebrew, Miniforge, and finally, TensorFlow on a macOS M1 computer. To check that everything works, we trained a simple neural network on the MNIST dataset and compared its training time on the CPU and GPU devices.

Did you like this post? Please let me know if you have any comments or suggestions.

About Elena

Elena, a PhD in Computer Science, simplifies AI concepts and helps you use machine learning.