Feature preprocessing29 Jan 2022 / 15 minutes to read Elena Daehnhardt |

|

Introduction

When we have mixed datasets with different feature types, we want to prepare data for feeding into a Machine Learning algorithm. This happens when we have different inputs (also called features or covariates), such as categories such as gender or geographic region. Other features can be on a different scale, for instance, a person’s weight or height.

First of all, the Machine Learning algorithm requires that data is in a specific type. For instance, we can use only numerical data. In other cases, ML algorithms would perform better or converge faster when we preprocess data before training the model. Since we do this step before training the model, we call it preprocessing. In this article, we will focus on two main methods of feature preprocessing, including feature scaling (or normalisation) and feature standardization.

Data Exploration

To decide what we do with the data and apply Machine Learning to it, we need to analyse the dataset. We want to determine what features we have, whether they are helpful for our ML goals, how clean the dataset is, the presence of missing or noisy data. Quite often, we need also to perform data cleaning or wrangling.

It is pretty useful to visualise features, create tables, remove irrelevant features, or change the input values in different data types. To start playing with data, we first download our dataset provided by Kaggle, we use Pandas Python library for downloading data directly from GitHub:

# Importing libraries further used in code

import pandas as pd

import matplotlib.pyplot as plt

import tensorflow as tf

insurance = pd.read_csv("https://raw.githubusercontent.com/stedy/Machine-Learning-with-R-datasets/master/insurance.csv")

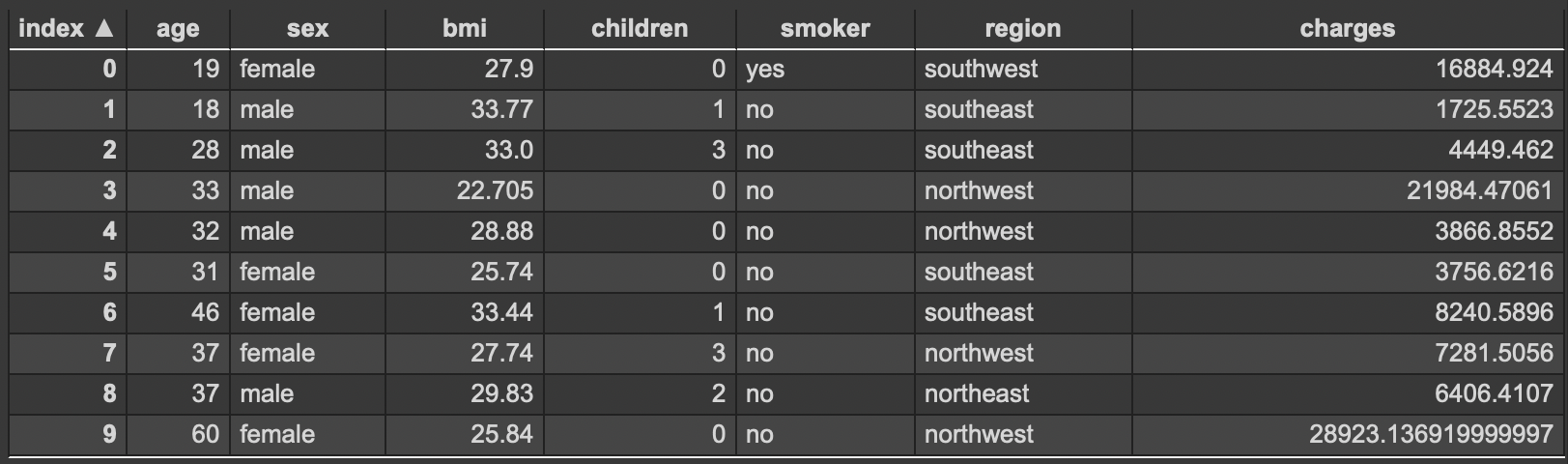

insurance.head(10)

The table shows the first ten rows of the insurance charges dataset we just downloaded. Our main goal is to predict medical insurance charges having a person’s age, sex, BMI, number of children, geographic region, and smoking status.

We can use Pandas functions such as info() and describe() to explore our features more in-depth. The info() function prints out a summary of the data with column names data types and finds any missing values.

insurance.info()

<class 'pandas.core.frame.DataFrame'> RangeIndex: 1338 entries, 0 to 1337 Data columns (total 7 columns): # Column Non-Null Count Dtype --- ------ -------------- ----- 0 age 1338 non-null int64 1 sex 1338 non-null object 2 bmi 1338 non-null float64 3 children 1338 non-null int64 4 smoker 1338 non-null object 5 region 1338 non-null object 6 charges 1338 non-null float64 dtypes: float64(2), int64(2), object(3) memory usage: 73.3+ KB

Should we require to change any data type, we use astype() function. For instance, we want to change the age and number of children from int64 (default type assigned when we downloaded the dataset) into the int8, which will need less memory size for storage. If you are interested in playing with different data types, read the “Overview of Pandas Data Types” by Chris Moffitt.

insurance['age']= insurance['age'].astype('int8')

insurance['children']= insurance['children'].astype('int8')

When we run the info() function once again, we can see that we saved already 18KB ad=fter changing to int8.

insurance.info()

<class 'pandas.core.frame.DataFrame'> RangeIndex: 1338 entries, 0 to 1337 Data columns (total 7 columns): # Column Non-Null Count Dtype --- ------ -------------- ----- 0 age 1338 non-null int8 1 sex 1338 non-null object 2 bmi 1338 non-null float64 3 children 1338 non-null int8 4 smoker 1338 non-null object 5 region 1338 non-null object 6 charges 1338 non-null float64 dtypes: float64(2), int8(2), object(3) memory usage: 55.0+ KB

The describe function is helpful for numerical features to get their statistical characteristics such as counts, mean, standard deviation.

insurance.info()

| index | age | bmi | children | charges |

|---|---|---|---|---|

| count | 1338.0 | 1338.0 | 1338.0 | 1338.0 |

| mean | 39.20702541106129 | 30.663396860986538 | 1.0949177877429 | 13270.422265141257 |

| std | 14.049960379216172 | 6.098186911679017 | 1.2054927397819095 | 12110.011236693994 |

| min | 18.0 | 15.96 | 0.0 | 1121.8739 |

| 25% | 27.0 | 26.29625 | 0.0 | 4740.28715 |

| 50% | 39.0 | 30.4 | 1.0 | 9382.033 |

| 75% | 51.0 | 34.69375 | 2.0 | 16639.912515 |

| max | 64.0 | 53.13 | 5.0 | 63770.42801 |

We can do further data analysis with a grouping function to observe that in average, there are larger mdeical insurance charges for smokers.

insurance.groupby("smoker")["charges"].mean()

smoker no 8434.268298 yes 32050.231832 Name: charges, dtype: float64

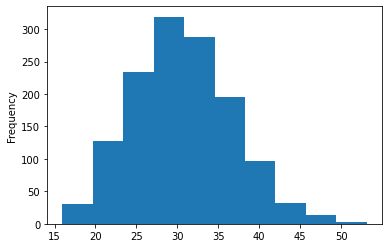

We can also draw plots with Pandas. For instance, we can plot a histogram of the “bmi” feature.

insurance["bmi"].plot(kind="hist")

Since the data exploration is not our main topic, you can read more about it at “Data Exploration 101 with Pandas” by Günter Röhrich. On possible tools to automate the data exploration, read the article by Abdishakur “4 Tools to Speed Up Exploratory Data Analysis (EDA) in Python”.

Data Preprocessing

To prepare our data for use in ML, we preprocess the data for a better machine-readable form. For instance, we can convert string categories into numerical features, change numerical features to standard scales with normalisation or perform data standardisation.

When we do normalization, we change data to a common scale (from 0 to 1). In sklearn, we have feature scaling MinMaxScaler. In standardisation, we remove the mean and divide each value by the standard deviation, implemented in sklearn with StandardScaler. Both methods can lead to better performance or faster convergence of ML. For instance, Linear and logistic regression, nearest neighbors, and neural networks can benefit from feature scaling. However, while some algorithms work better with some methods, such as, neural networks work can work better with normalisation , it is good to experiment with both methods to see which method is the best for your purposes, whtehre it is a training speed or in terms of your algorithm performance such as accuracy or errors.

Transforming Features

Let’s go into an example of Transforming Features with MinMaxScaler and OneHotEncoder (sklearn) for our insurance charges dataset. We can try out OneHot Encoder, and MinMaxScaler provided by sklearn. Both methods can be combined in the preprocessing step with make_column_transformer(). It is essential to mention that we fit training data to the column transformer, which we further use with testing data. We need to ensure that data leakage won’t happen. Read about data leakage in “How to Avoid Data Leakage When Performing Data Preparation” by Jason Brownlee.

from sklearn.compose import make_column_transformer

from sklearn.preprocessing import MinMaxScaler, OneHotEncoder

from sklearn.model_selection import train_test_split

# Create column transformer for feature preprocessing

column_transformer = make_column_transformer(

(MinMaxScaler(), ["age", "bmi", "children"]),

(OneHotEncoder(handle_unknown="ignore"), ["sex", "smoker", "region"])

)

# Create X and y

X = insurance.drop("charges", axis=1)

y = insurance["charges"]

# Build our train and test sets

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size = 0.2,

random_state=57)

# Fit the column transformer to our training data

column_transformer.fit(X_train)

# Transform training and test data with normalisation (MinMaxScaler)

# and OneHotEncoder

X_train_normalised = column_transformer.transform(X_train)

X_test_normalised = column_transformer.transform(X_test)

# Normalised features example

X_train_normalised[0]

array([0.02173913, 0.34217191, 0. , 1. , 0. ,

0. , 1. , 0. , 0. , 0. ,

1. ])

Creating and Evaluating a Neural Network

We build a neural network to fit the normalised data with the preprocessed data. We use Keras Sequential model with two hidden layers. As a result of model evaluation on the test set, we get MAE=1697.4192.

# Build a neural network to fit the normalised data

model = tf.keras.models.Sequential([

tf.keras.layers.Dense(100, activation="relu"),

tf.keras.layers.Dense(10, activation="relu"),

tf.keras.layers.Dense(1)

])

model.compile(loss=tf.keras.losses.mae,

optimizer=tf.keras.optimizers.Adam(lr=0.1),

metrics=["mae"])

history = model.fit(X_train_normalised, y_train, epochs=100, verbose=0)

model.evaluate(X_test_normalised, y_test)

/usr/local/lib/python3.7/dist-packages/keras/optimizer_v2/adam.py:105: UserWarning: The `lr` argument is deprecated, use `learning_rate` instead. super(Adam, self).__init__(name, **kwargs) 9/9 [==============================] - 0s 2ms/step - loss: 1697.4192 - mae: 1697.4192 [1697.419189453125, 1697.419189453125]

Conclusion

In this post, we downloaded the insurance charges dataset from GitHub preprocessed the dataset with Pandas and sklearn libraries. Sklearn allowed us to combine One-hot encoding and feature scaling in one column-transforming step, which is done before building our ML model. We trained and evaluated a neural network model using Keras with the prepared data.

I am affiliated with and recommend the following fantastic books for learning Python and data wrangling and analysis skills.

Did you like this post? Please let me know if you have any comments or suggestions.

Python posts that might be interesting for youBibliography

I am thankful to the TensorFlow Developer Certificate in 2022: Zero to Mastery and following authors for the information used in preparing this post.

|

About Elena Elena, a PhD in Computer Science, simplifies AI concepts and helps you use machine learning.

|