Introduction

Recently, I received an email announcing the launch of ARC Prize 2024, an awesome Kaggle competition. What is so special about this competition?

ARC-AGI benchmark

The ARC-AGI benchmark (Abstraction and Reasoning Corpus for Artificial General Intelligence) stands out for several reasons:

- Focus on Generalisation: Unlike many AI benchmarks that test performance on specific tasks, ARC-AGI emphasises the ability to generalise to novel problems. It assesses an AI system’s capacity to learn new skills and solve tasks it hasn’t been explicitly trained on.

- Measures Fluid Intelligence: ARC-AGI aims to measure general fluid intelligence similar to what humans possess. This involves abstract reasoning, pattern recognition, and problem-solving abilities applied to unfamiliar situations.

- Minimal Prior Knowledge: The tasks in ARC-AGI require minimal prior knowledge. They focus on core reasoning skills rather than relying on extensive domain-specific information.

- Human-Level Performance: Humans generally score high on ARC-AGI tasks (around 85%), while current AI systems lag significantly behind. This indicates that ARC-AGI presents a challenging frontier for AI development.

- Prize Competition: The ARC Prize, a $1,000,000+ competition, was launched to encourage researchers to develop AI systems that can beat the benchmark and potentially contribute to progress towards Artificial General Intelligence (AGI).

Is it a Puzzle game?

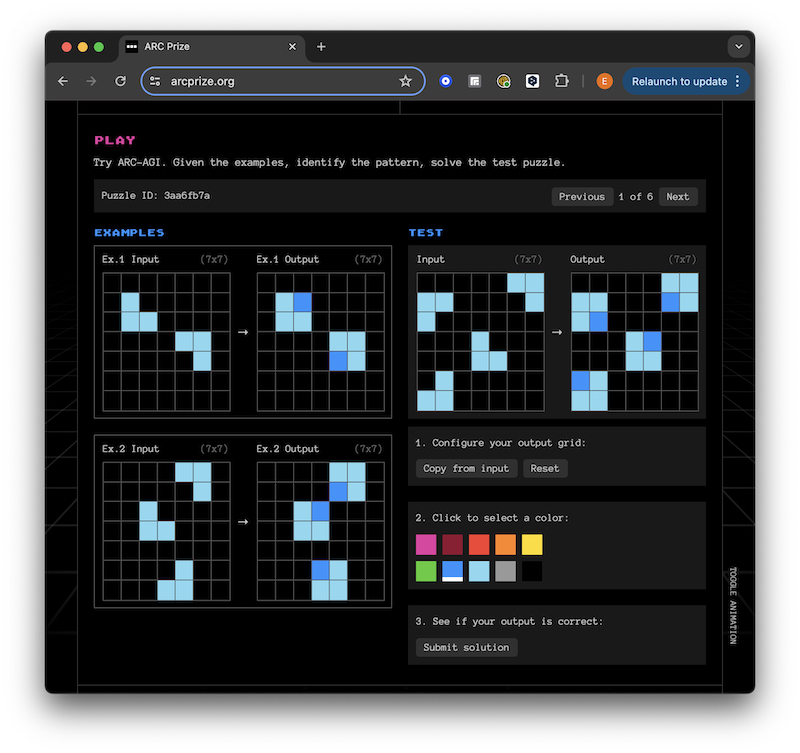

You can test your own human (or bot) intelligence on the ARC Prize website. The tasks there are very easy for humans but difficult for AI.

The ARC task is solved

ARC-AGI itself is not a puzzle game in the traditional sense of entertainment. However, the tasks within the benchmark often resemble puzzles. They consist of input and output grids with visual patterns, and the goal is to figure out the rule or transformation that generates the output from the input.
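To make the grid format concrete, here is a minimal Python sketch. It assumes the task layout used in the ARC-AGI GitHub repository: a JSON object with "train" and "test" lists of input/output pairs, where each grid is a list of rows of integers from 0 to 9. The toy task and the `flip_horizontal` rule are made up for illustration and are not taken from the dataset.

```python
import json

# A made-up toy task in the ARC-AGI JSON layout (not from the real dataset).
TOY_TASK_JSON = """
{
  "train": [
    {"input": [[1, 0, 0], [2, 0, 0]], "output": [[0, 0, 1], [0, 0, 2]]},
    {"input": [[0, 3], [4, 0]], "output": [[3, 0], [0, 4]]}
  ],
  "test": [
    {"input": [[5, 0, 0, 0]], "output": [[0, 0, 0, 5]]}
  ]
}
"""


def flip_horizontal(grid):
    """Hypothetical candidate rule: mirror every row left to right."""
    return [list(reversed(row)) for row in grid]


def rule_fits_training_pairs(rule, task):
    """Return True if the rule reproduces every training output exactly."""
    return all(rule(pair["input"]) == pair["output"] for pair in task["train"])


task = json.loads(TOY_TASK_JSON)

# First check the guessed rule against the demonstrations,
# then apply it to the unseen test input.
fits = rule_fits_training_pairs(flip_horizontal, task)
prediction = flip_horizontal(task["test"][0]["input"])

print("Rule fits all training pairs:", fits)
print("Predicted test output:", prediction)
```

A real solver cannot hard-code the rule like this, of course. The hard part of the benchmark is discovering a different rule for every task from only a few demonstration pairs.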

The tasks require:

- Pattern recognition: Identifying the underlying relationships and rules within the visual patterns.

- Logical reasoning: Applying the identified rules to generate the correct output for new input patterns.

- Abstraction: Understanding the core concept or principle behind the pattern transformation rather than memorising specific examples.

These cognitive skills are similar to those used in solving puzzles, making the tasks feel like puzzles. However, the purpose of ARC-AGI is not entertainment but rather to assess and advance AI capabilities in abstract reasoning and generalisation.

So, while ARC-AGI is not a puzzle game per se, the nature of its tasks often evokes a similar problem-solving experience.

Why is it unique?

The ARC benchmark is defined in this GitHub repository (along with the dataset and testing interface):

ARC can be seen as a general artificial intelligence benchmark, as a program synthesis benchmark, or as a psychometric intelligence test. It is targeted at both humans and artificially intelligent systems that aim at emulating a human-like form of general fluid intelligence.

Overall, ARC-AGI is a unique and essential benchmark as it:

- Challenges Current AI Limitations: Highlights the gap between current AI capabilities and human-like general intelligence.

- Promotes Research on Generalisation: Encourages the development of AI systems that can learn and adapt to new tasks, a crucial step towards AGI.

- Offers a Standardised Measure: Provides a standardised way to assess progress in developing AI with general problem-solving abilities.

Prize

ARC’s grand prize of $500,000 is for teams achieving 85% accuracy on the test set. Anyone can join the competition. At the time of writing, MindsAI is leading the competition.
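To illustrate what an accuracy figure like 85% means, here is a minimal Python sketch. It assumes exact-match scoring, where a predicted grid counts only if it is identical to the expected output. It also assumes up to two attempts per test output, which is my understanding of the Kaggle rules, so please check the competition page for the authoritative definition. The function name and the sample grids are hypothetical.

```python
def score_submission(attempts_per_output, expected_outputs, max_attempts=2):
    """Fraction of test outputs matched exactly by one of the allowed attempts."""
    if not expected_outputs:
        raise ValueError("expected_outputs must not be empty")
    solved = 0
    for attempts, expected in zip(attempts_per_output, expected_outputs):
        # No partial credit: a grid is either identical or wrong.
        if any(attempt == expected for attempt in attempts[:max_attempts]):
            solved += 1
    return solved / len(expected_outputs)


# Four made-up expected output grids.
expected = [[[0, 1]], [[2, 2], [2, 2]], [[3]], [[4, 0]]]

# For each expected grid, a list of attempted grids.
attempts = [
    [[[0, 1]]],                    # solved on the first attempt
    [[[2, 2]], [[2, 2], [2, 2]]],  # solved on the second attempt
    [[[0]], [[1]]],                # both attempts wrong
    [[[0, 4]], [[4]], [[4, 0]]],   # correct only on a third attempt, which is ignored
]

score = score_submission(attempts, expected)
print(f"Score: {score:.0%}")
```

Under these assumptions, reaching the 85% threshold means getting the complete output grid right for 85 out of every 100 test outputs.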

For further information, you can explore the following resources:

- ARC-AGI GitHub repository: https://github.com/fchollet/ARC-AGI

- ARC Prize website: https://arcprize.org/

- ARC Prize 2024 Kaggle competition: https://www.kaggle.com/competitions/arc-prize-2024

Discussion

I could not refrain from sharing my opinion here, since I can :)

General intelligence is about a machine’s ability to learn and adapt. The competition is about AI that does not memorise but solves open-ended problems like humans. It is a very important step in achieving progress in AGI and improving AI’s ability to acquire skills and become more inventive as it evolves.

Would AGI be possible in the near future?

In my opinion, we could imitate human reasoning and teach machines to acquire new skills and become self-learners to a certain extent in the next five to ten years. Please subscribe to get updated on my future AI predictions on this blog :)

What do we still need for AGI to become a reality sooner? Some argue that AI’s power is correlated with the number of parameters and the computational resources it uses. I agree that more parameters can allow for smarter AI networks. However, more parameters are not necessarily better. Consider the possibility of AI systems memorising the data by heart and “overfitting” (read about machine-learning overfitting in my post Bias-Variance Challenge).

We really want AI systems to become “inventive” and intelligent. If we are to develop human-like AGI, we have to do more than merely increase the number of parameters tenfold or a hundredfold to reach, say, the number of neurons in the human brain.

To achieve real AI intelligence, we have to go beyond digital representation. Why? Because we humans do not think discretely. Our neurons work through chemical reactions, which allow much more computational and non-stochastic power, and they cannot be compared to AI, even a very smart one.

To create AGI that goes beyond automation and content generation, as in LLMs, we must develop non-discrete systems, which amounts to “reinventing the wheel”. Should we instead work on improving natural intelligence first? Would there be good potential in that?

It would also be worthwhile to study the human brain in more depth to understand how intelligence and creativity develop. Only when we truly understand what makes intelligence and how it works in nature can we start modeling it and possibly improving on it in AI.

Conclusion

I hope this explanation sheds light on the significance of the ARC-AGI benchmark! Will you join the ARC competition?

Try the following fantastic AI-powered applications.

I am affiliated with some of them (to support my blogging at no cost to you). I have also tried these apps myself, and I liked them.

Chatbase provides AI chatbots integration into websites.

Flot.AI assists in writing, improving, paraphrasing, summarizing, explaining, and translating your text.

CustomGPT.AI is a very accurate Retrieval-Augmented Generation tool that provides accurate answers using the latest ChatGPT to tackle the AI hallucination problem.

MindStudio.AI builds custom AI applications and automations without coding. Use the latest models from OpenAI, Anthropic, Google, Mistral, Meta, and more.

Originality.AI is a very efficient plagiarism and AI content detection tool.

Did you like this post? Please let me know if you have any comments or suggestions.
