Audio Signal Processing with Python's Librosa

05 Mar 2023 / 52 minutes to read / Elena Daehnhardt


If you click an affiliate link and subsequently make a purchase, I will earn a small commission at no additional cost (you pay nothing extra). This is important for promoting tools I like and supporting my blogging.

I thoroughly check the affiliated products' functionality and use them myself to ensure high-quality content for my readers. Thank you very much for motivating me to write.

Introduction

Are you ready to dive into the fascinating world of audio processing with Python? Recently, a colleague sparked my interest in music-retrieval applications and the use of Python for audio processing tasks. As a result, I’ve put together an introductory post that will leave you awestruck with the power of Python’s Librosa library for extracting wave features commonly used in research and application tasks such as gender prediction, music genre prediction, and voice identification. But before tackling these complex tasks, we need to understand the basics of signal processing and how they relate to working with WAV files. So, buckle up and get ready to explore the ins and outs of spectral features and their extraction - an exciting journey you won’t want to miss!

Audio storage and processing

What is an audio signal?

An audio signal is a representation of sound waves in the air. These sound waves are captured by a microphone and converted into an electrical signal, which can then be stored and manipulated digitally.

To store an audio signal digitally, the analogue electrical signal is first sampled at regular intervals, typically at 44,100 samples per second for CD-quality audio. Each sample is represented as a binary number with a certain bit depth, such as 16 bits. The higher the bit depth, the more accurately the analogue signal’s amplitude can be represented.
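To make sampling and bit depth concrete, here is a minimal NumPy sketch that samples a 440 Hz sine tone at 44,100 samples per second and quantises it to 16-bit integers, the sample format used for CD audio. The tone frequency, amplitude and duration are arbitrary choices for illustration.

```python
import numpy as np

sample_rate = 44100   # samples per second (CD quality)
duration = 1.0        # seconds
frequency = 440.0     # Hz, an A4 tone chosen for illustration

# Sampling: evaluate the "analogue" sine wave at regular time steps
t = np.arange(int(sample_rate * duration)) / sample_rate
analogue = 0.8 * np.sin(2 * np.pi * frequency * t)

# Quantisation: map the range [-1.0, 1.0] onto 16-bit signed integers
max_int16 = 2 ** 15 - 1  # 32767
samples_int16 = np.round(analogue * max_int16).astype(np.int16)

# Error introduced by 16-bit quantisation (at most half a quantisation step)
reconstructed = samples_int16 / max_int16
max_error = np.max(np.abs(reconstructed - analogue))

print(samples_int16.shape, samples_int16.dtype)
print(f"max quantisation error: {max_error:.2e}")
```

With 8 bits instead of 16, the same step would be 256 times coarser, which is why a higher bit depth represents the amplitude more accurately.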

The binary numbers are then stored in a digital audio file format such as WAV or MP3. WAV files usually keep the samples uncompressed, while formats such as MP3 compress the audio to reduce file size while maintaining acceptable audio quality. Compression can be lossless (as in FLAC), meaning that no audio data is lost, or lossy (as in MP3), meaning that some audio data is discarded.

When the digital audio file is played back, the binary numbers are converted back into an analogue electrical signal by a digital-to-analogue converter, which can then be amplified and played through a speaker or headphones to produce sound waves in the air.

Audio file formats

Audio can be stored in files using different formats, depending on the application and the user’s requirements. Some of the most common formats used for storing audio in files include:

- MP3: This lossy compressed audio format is widely used for music playback and streaming. It offers good audio quality at relatively small file sizes, making it a popular choice for storing and sharing music files.

- WAV: This uncompressed audio format provides high-quality audio with no loss of fidelity. It is commonly used for recording and editing audio files, as well as for creating audio CDs.

- AAC: This lossy compressed audio format is similar to MP3 but generally achieves better sound quality at the same bitrate. It is commonly used for streaming audio and video content.

- FLAC: This lossless compressed audio format provides high-quality audio with no loss of fidelity. It is commonly used for storing and sharing high-resolution audio files.

- OGG: Ogg is a container format that most often holds lossy Vorbis- or Opus-compressed audio. It is commonly used for streaming audio and video content and offers good audio quality with relatively small file sizes.

- AIFF: This uncompressed audio format provides high-quality audio with no loss of fidelity. It is commonly used for recording and editing audio files on Apple computers.

The choice of format depends on factors such as the audio quality, the file size, and the compatibility with the playback device or software.

Python libraries for audio processing

There are several Python libraries for audio processing, each with its features and capabilities. Here are some of the most popular and widely used libraries for audio processing in Python:

- NumPy is a fundamental library in Python for numerical computing. It provides fast operations on the arrays that hold audio samples, as well as the numpy.fft module for computing the FFT (Fast Fourier Transform).

- SciPy is built on top of NumPy and provides additional scientific and technical computing functionalities, including digital signal processing (DSP), Fourier analysis, and filter design.

- Librosa is a library for analysing and processing audio signals. It includes functionality for feature extraction, beat tracking, pitch estimation, and more.

- Pydub is a simple and easy-to-use library for working with audio files in Python. It allows you to load, manipulate, and save various audio file formats, including MP3, WAV, and AIFF.

- Soundfile is a library for reading and writing sound files. It supports various file formats, such as WAV, FLAC, and OGG, and provides a simple and straightforward interface for working with audio data.

- PyAudio provides a Python interface to the PortAudio library, a cross-platform library for audio input and output. It allows you to record and play back audio in real time and supports various input and output devices.

- FFmpeg is a command-line tool for manipulating video and audio files. Several Python packages build on FFmpeg, including moviepy and ffmpeg-python, and provide a simple interface for working with it from Python.

Overall, selecting the best library for audio processing depends on the specific use case and the project’s requirements.

In this post, I focus on Librosa, which is a great starting point for audio processing in Python. I will also use wave, sounddevice, soundfile and, of course, NumPy!

I am affiliated with and recommend the following fantastic books for learning Python and mastering your audio processing and digital music programming skills.

Installing required libraries

First, you’ll need to install a few libraries to work with audio files in Python. Besides librosa, NumPy and SciPy (see scipy.signal) are useful for audio processing. We also use the sounddevice library [4] to record and play sound, soundfile to save our audio files and matplotlib for plotting. You can install them using pip:

pip install librosa numpy soundfile sounddevice matplotlib

Additionally, we can use the wave module from the Python standard library, which provides an interface for working with WAV files.

As usual, we import the required libraries before we start coding.

import librosa

import numpy as np

import soundfile as sf

import wave

import sounddevice as sd

Working with WAV files

WAV for audio storage

WAV files have the extension .wav and can be played on most media players, including Windows Media Player, iTunes and VLC Media Player. WAV is a standard file format for storing high-quality audio and is supported by many devices and audio applications. WAV files usually store uncompressed PCM audio, keeping the raw audio data without losing quality. This results in large file sizes but preserves the audio quality.

WAV files are often used in professional audio applications such as recording studios and sound production, where high-quality audio is required. The WAV format is flexible and supports various configurations, including mono and stereo channels, different bit depths (such as 8-bit and 16-bit) and different sample rates, which makes it popular for high-quality audio storage.

Recording voice

Here is an example Python code that records voice using the sounddevice library. Please note that you can also use PyAudio, a popular library for recording and playing audio.

import sounddevice as sd

# Set the sampling frequency and duration of the recording

sampling_frequency = 44100

duration = 5 # in seconds

# Record audio

print("Recording...")

audio = sd.rec(int(sampling_frequency * duration), samplerate=sampling_frequency, channels=1)

sd.wait() # Wait until recording is finished

print("Finished recording")

The sample rate is the number of times per second the audio signal is measured (sampled).

The sample rate determines which frequencies the digital signal can represent: according to the Nyquist-Shannon sampling theorem, a signal sampled at rate fs can only capture frequencies up to fs/2. A higher sample rate therefore yields a more detailed representation that includes higher frequencies, while a lower sample rate loses high-frequency content.

Standard sample rates include 44.1 kHz, 48 kHz, and 96 kHz. The most commonly used sample rate for music is 44.1 kHz, used in CDs and considered a standard for high-quality audio.

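It’s important to note that the sample rate affects both sound quality and file size. Recording at a higher sample rate captures more high-frequency detail but produces larger files. However, resampling an existing recording to a higher rate cannot restore detail that was never captured, while downsampling it discards content above the new Nyquist frequency (half the new sample rate) and reduces the file size.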
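The following NumPy sketch shows why the sample rate limits which frequencies can be captured. A signal sampled at rate fs can only represent frequencies up to fs/2, so a hypothetical 30 kHz tone sampled at 44.1 kHz does not disappear; it shows up as an alias at 44.1 − 30 = 14.1 kHz. The tone frequency is an assumption chosen to exceed the Nyquist limit.

```python
import numpy as np

sample_rate = 44100          # Hz
nyquist = sample_rate / 2    # 22050 Hz: highest representable frequency
tone = 30000.0               # Hz, deliberately above the Nyquist frequency

# One second of samples of the 30 kHz tone
t = np.arange(sample_rate) / sample_rate
x = np.sin(2 * np.pi * tone * t)

# Find the dominant frequency in the sampled signal with an FFT
spectrum = np.abs(np.fft.rfft(x))
freqs = np.fft.rfftfreq(len(x), d=1 / sample_rate)
apparent = freqs[np.argmax(spectrum)]

print(f"Nyquist: {nyquist} Hz, apparent frequency: {apparent:.1f} Hz")  # alias at about 14100 Hz
```

This is also why downsampling functions such as librosa.resample low-pass filter the signal first: content above the new Nyquist frequency would otherwise fold back as aliases.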

Saving an audio file

To save our recording, we can use the soundfile’s write function as follows.

import soundfile as sf

# Save the recorded audio to a WAV file

sf.write('voice.wav', audio, sampling_frequency)

Together, these snippets record 5 seconds of audio from the default microphone and save it as voice.wav with a sample rate of 44.1 kHz; for WAV files, soundfile writes 16-bit PCM samples by default. You can change the duration variable to adjust the length of the recording and the first argument of sf.write() to change the file name.

Playing an audio file

To play audio in Python, we can use the sounddevice library:

sd.play(audio, sampling_frequency)

sd.wait()

In this example, we use the play() function to play the recorded array at the specified sample rate, and then we use wait() to block until playback has finished.

Loading WAV files

To load a WAV file, we can use the “wave” module:

with wave.open('voice.wav', 'rb') as wav_file:

    channels_number, sample_width, framerate, frames_number, compression_type, compression_name = wav_file.getparams()

    frames = wav_file.readframes(frames_number)

audio_signal = np.frombuffer(frames, dtype='<i2')

channels_number, sample_width, framerate, frames_number, compression_type, compression_name

(1, 2, 44100, 220500, 'NONE', 'not compressed')

In this example, we open the voice.wav file in binary read mode ('rb') and extract its metadata with the getparams() method. We then read all audio frames into a bytes object and convert them to a NumPy array with np.frombuffer(), specifying the data type as '<i2' (little-endian 16-bit signed integers), which matches the sample width of 2 bytes.
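As a self-contained check of this wave-based loading, the sketch below writes a short 16-bit mono tone into an in-memory WAV file (io.BytesIO stands in for voice.wav, so no microphone or file on disk is needed), reads it back with wave.open, and converts the int16 samples to floats in [-1, 1], the floating-point representation that soundfile and librosa return by default. The tone and its length are arbitrary assumptions.

```python
import io
import wave

import numpy as np

sample_rate = 44100
t = np.arange(sample_rate // 10) / sample_rate                   # 0.1 s of audio
tone = (0.5 * np.sin(2 * np.pi * 440.0 * t) * 32767).astype('<i2')

# Write a mono, 16-bit WAV file into an in-memory buffer
buffer = io.BytesIO()
with wave.open(buffer, 'wb') as wav_out:
    wav_out.setnchannels(1)          # mono
    wav_out.setsampwidth(2)          # 2 bytes = 16 bits per sample
    wav_out.setframerate(sample_rate)
    wav_out.writeframes(tone.tobytes())

# Read it back the same way as voice.wav is read
buffer.seek(0)
with wave.open(buffer, 'rb') as wav_in:
    params = wav_in.getparams()
    frames = wav_in.readframes(params.nframes)

audio_int16 = np.frombuffer(frames, dtype='<i2')
# Scale the 16-bit integers to floats in [-1.0, 1.0)
audio_float = audio_int16.astype(np.float32) / 32768.0

print(params.nchannels, params.sampwidth, params.framerate, params.nframes)
```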

If you prefer using Jupyter notebooks or Google Colab, you can also play the audio using the Audio class from IPython.display.

from IPython.display import Audio

Audio(audio_signal, rate=framerate)

Librosa use cases

Librosa is a Python library for analysing audio signals and provides functions for loading, transforming, and manipulating audio signals. The library has a simple, easy-to-use interface and supports various audio file formats, such as .wav and .mp3.

First, we need some sound files to load and analyse with librosa.

There are plenty of sound file resources online. In the examples below, I use soundtracks recorded by LoopMaiden, available at the following links.

- Sacrifice (mp3) https://freesound.org/people/LoopMaiden/sounds/567852/

- Drums (mp3) https://freesound.org/people/LoopMaiden/sounds/565186/

I use wget to download the sound files locally when working in Jupyter notebooks.

# Getting the sacrifice sound file

!wget https://cdn.freesound.org/previews/567/567852_12708796-lq.mp3

# Getting the drums' sound file

!wget https://cdn.freesound.org/previews/565/565186_12708796-lq.mp3

Next, we use the sacrifice_file and drums_file variable names for storing the corresponding file names.

# Keep the file names for further use

sacrifice_file = "567852_12708796-lq.mp3"

drums_file = "565186_12708796-lq.mp3" # if wget renamed a repeated download (e.g. with a .1 suffix), use that name

Loading an audio file

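To load an audio file with librosa, you can use the librosa.load function. It takes the file path as an argument and returns the audio signal as a floating-point NumPy array together with its sample rate. By default, librosa.load resamples the audio to 22,050 Hz and mixes it down to mono; pass sr=None to keep the original sample rate and mono=False to keep all channels.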

# load the audio signal and its sample rate

sacrifice_signal, sample_rate = librosa.load(sacrifice_file)

The sacrifice_file variable points to an MP3 file, and librosa.load() reads it just like a WAV file.

This is easy because librosa first tries to decode the file with soundfile and, for formats soundfile cannot read, falls back to the audioread library, which relies on decoding backends such as FFmpeg. Together, they support many formats, including WAV, MP3, FLAC, OGG and AIFF.

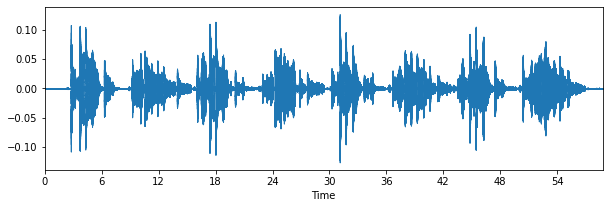

Plotting the signal

With librosa.display, we can plot the amplitude of the signal over time.

import librosa.display

import matplotlib.pyplot as plt

plt.figure(figsize=(10, 3))

librosa.display.waveshow(sacrifice_signal, sr=sample_rate) # in librosa versions older than 0.9, use librosa.display.waveplot instead

plt.show()

Spectral features

Spectral features are a set of audio features that capture the spectral content of an audio signal, including information about its frequency and power distribution. They represent the audio signal in the frequency domain, which provides information about the different frequency components present in the signal.

Spectral features help capture information about the timbre and texture of sounds and energy distribution across different frequency bands.

Various spectral features provide different representations of the spectral content of an audio signal, and some emphasise particular aspects of the signal, such as its harmonic or percussive content. Librosa provides easy-to-use functions for computing spectral features, including the mel-spectrogram, mel-frequency cepstral coefficients (MFCCs), spectral contrast and chroma features.

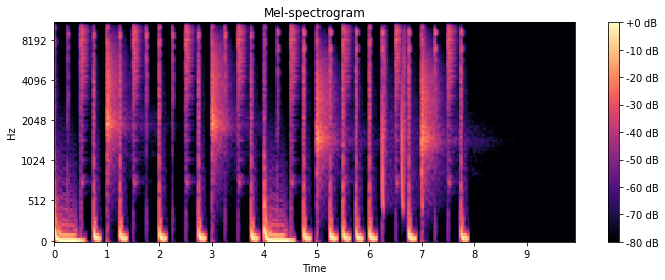

The mel-spectrogram

The mel-spectrogram represents an audio signal by mapping the power of its spectral content onto the mel scale, a perceptual scale of pitches. It is computed by first transforming the audio signal into the frequency domain with a short-time Fourier transform and then applying a mel-scale filterbank to the power spectrum. The resulting energies are usually converted to a logarithmic (decibel) scale; in librosa, melspectrogram() returns power values and librosa.power_to_db() performs this conversion.

The mel-spectrogram is widely used in audio and music analysis, such as sound classification, genre recognition, and content-based music retrieval. It helps capture information about the timbre and texture of sounds and energy distribution across different frequency bands.

Using the mel scale in the mel-spectrogram has several benefits over the raw power spectrum. The mel scale is based on the perception of the pitch by the human ear and considers that the ear is more sensitive to some frequencies than others. By mapping the power spectrum onto the mel scale, the mel-spectrogram provides a more meaningful representation of the spectral content of the audio signal, which is closer to how we perceive sound.
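The mel-spectrogram steps can be sketched in NumPy for a single frame. This is an illustration, not librosa's implementation: it uses the HTK mel formula, mel = 2595 · log10(1 + f / 700), whereas librosa.feature.melspectrogram uses the Slaney variant by default (librosa.hz_to_mel(..., htk=True) gives the HTK version), and its triangular filters are not area-normalised as librosa's are. The helper names and the 1 kHz test tone are hypothetical.

```python
import numpy as np

def hz_to_mel(f):
    """HTK mel scale: roughly linear below 1 kHz, logarithmic above."""
    return 2595.0 * np.log10(1.0 + np.asarray(f, dtype=float) / 700.0)

def mel_to_hz(m):
    """Inverse of hz_to_mel."""
    return 700.0 * (10.0 ** (np.asarray(m, dtype=float) / 2595.0) - 1.0)

def mel_filterbank(sr, n_fft, n_mels=40):
    """Triangular filters evenly spaced on the mel scale (hypothetical helper).

    Returns a (n_mels, 1 + n_fft // 2) weight matrix and each filter's centre in Hz.
    """
    fft_freqs = np.linspace(0.0, sr / 2.0, 1 + n_fft // 2)
    # n_mels + 2 equally spaced mel points define the left/centre/right edges
    mel_points = np.linspace(0.0, hz_to_mel(sr / 2.0), n_mels + 2)
    hz_points = mel_to_hz(mel_points)
    weights = np.zeros((n_mels, fft_freqs.size))
    for i in range(n_mels):
        left, centre, right = hz_points[i], hz_points[i + 1], hz_points[i + 2]
        rising = (fft_freqs - left) / (centre - left)
        falling = (right - fft_freqs) / (right - centre)
        weights[i] = np.maximum(0.0, np.minimum(rising, falling))
    return weights, hz_points[1:-1]

# Example: one Hann-windowed frame of a 1 kHz tone
sr, n_fft = 16000, 512
t = np.arange(n_fft) / sr
frame = np.sin(2 * np.pi * 1000.0 * t) * np.hanning(n_fft)

power = np.abs(np.fft.rfft(frame)) ** 2        # 1. power spectrum
weights, centres = mel_filterbank(sr, n_fft)   # 2. mel filterbank
mel_energies = weights @ power                 # 3. energy per mel band
log_mel = np.log(mel_energies + 1e-10)         # 4. logarithm

loudest = np.argmax(log_mel)
print(f"loudest mel band is centred at {centres[loudest]:.0f} Hz")
```

Note how the filters get wider as frequency rises: equal steps on the mel scale cover more and more hertz, mirroring the ear's coarser resolution at high frequencies.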

Mel-spectrograms are widely used in various audio and music analysis tasks, including:

- Sound classification: Mel-spectrograms are used to classify sounds into different categories, such as speech, music, and noise. They provide a compact representation of the spectral content of the audio signal that can be used as input to machine learning algorithms for classification.

- Genre recognition: Mel-spectrograms are used to recognise the genre of music, such as rock, pop, classical, and hip-hop. They provide a compact representation of the spectral content of the audio signal that can be used to capture the unique characteristics of different music genres.

- Content-based music retrieval: Mel-spectrograms are used to retrieve music based on its content, such as the melody, rhythm, or timbre. They provide a compact representation of the spectral content of the audio signal that can be used to compare the similarity between different music pieces.

- Music transcription: Mel-spectrograms are used in music transcription systems that transcribe music into symbolic representations, such as sheet music or MIDI files. They provide a compact representation of the spectral content of the audio signal that can be used as input to machine learning algorithms for transcription.

- Music synthesis: Mel-spectrograms can be used in music synthesis systems, which aim to synthesise new music based on a given input. They provide a compact representation of the spectral content of the audio signal that can be used as input to machine learning algorithms for synthesis.

These are just a few examples of the many applications of mel-spectrograms in audio and music analysis. They are widely used due to their ability to capture the spectral content of the audio signal in a more meaningful and compact way, which makes them a powerful tool for various audio and music analysis tasks.

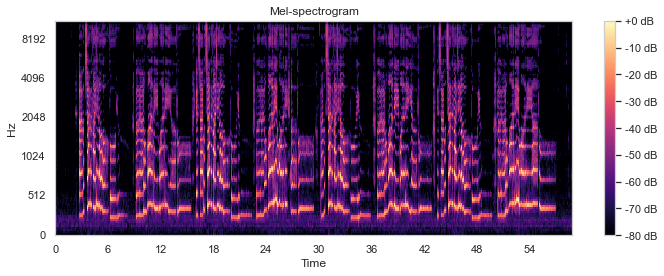

To extract spectral features, we can use librosa.feature.melspectrogram and plot the computed mel-spectrogram with librosa.display.specshow.

import librosa.display

import matplotlib.pyplot as plt

# Load the recorded file

signal, sr = librosa.load(sacrifice_file)

# Compute the mel-spectrogram

mel_spectrogram = librosa.feature.melspectrogram(y=signal, sr=sr)

# Plot the mel-spectrogram

plt.figure(figsize=(10, 4))

librosa.display.specshow(librosa.power_to_db(mel_spectrogram, ref=np.max), sr=sr, hop_length=512, y_axis="mel", x_axis="time")

plt.colorbar(format="%+2.0f dB")

plt.title("Mel-spectrogram")

plt.tight_layout()

plt.show()

mel_spectrogram

array([[8.6759080e-08, 8.1862680e-08, 2.1721085e-08, ..., 9.7081141e-08,

9.0149932e-08, 2.5203136e-07],

[2.5509055e-07, 4.5715746e-07, 5.5796284e-07, ..., 4.6257932e-07,

7.5741809e-07, 6.4309518e-07],

[4.5354949e-07, 6.1497201e-07, 1.5107657e-06, ..., 2.4389235e-06,

4.7857011e-06, 2.3308990e-06],

...,

[1.6104870e-09, 3.4708965e-09, 3.7480508e-09, ..., 1.1005507e-09,

1.7327233e-09, 3.0494558e-09],

[1.8408958e-10, 4.1122933e-10, 2.1915571e-10, ..., 3.3125191e-10,

3.7715914e-10, 5.4983756e-10],

[2.2381045e-12, 2.8185846e-12, 2.0125798e-12, ..., 4.5505917e-12,

6.7639215e-12, 9.8445193e-12]], dtype=float32)

Before plotting, we converted the mel-spectrogram from power to decibels (a logarithmic scale) with librosa.power_to_db(); the mel_spectrogram array itself, shown above, still holds power values.
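The decibel conversion is a simple formula. The NumPy sketch below mirrors what librosa.power_to_db computes with ref=np.max and its documented defaults (amin=1e-10, top_db=80): 10 · log10(S / ref), with tiny values floored at amin and everything clipped to at most top_db below the peak. It is a re-implementation for illustration, not librosa's own code, and the function name is hypothetical.

```python
import numpy as np

def power_to_db_sketch(S, amin=1e-10, top_db=80.0):
    """Convert a power spectrogram to dB relative to its maximum (like ref=np.max)."""
    S = np.asarray(S, dtype=float)
    ref = np.max(S)
    log_spec = 10.0 * np.log10(np.maximum(amin, S))
    log_spec -= 10.0 * np.log10(max(amin, ref))
    # Limit the dynamic range: nothing more than top_db below the peak
    return np.maximum(log_spec, log_spec.max() - top_db)

S = np.array([[1.0, 0.1], [0.01, 1e-12]])
print(power_to_db_sketch(S))
```

Each factor of 10 in power becomes a 10 dB step, so the tiny values in the printed mel_spectrogram array become readable negative dB levels, and the top_db clipping keeps near-silent bins from dominating the colour scale.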

Let’s compute and compare the mel-spectrogram of the drums’ sound file.

# Load the recorded file

drums_signal, sr = librosa.load(drums_file)

# Compute the mel-spectrogram

mel_spectrogram = librosa.feature.melspectrogram(y=drums_signal, sr=sr)

# Plot the mel-spectrogram

plt.figure(figsize=(10, 4))

librosa.display.specshow(librosa.power_to_db(mel_spectrogram, ref=np.max), sr=sr, hop_length=512, y_axis="mel", x_axis="time")

plt.colorbar(format="%+2.0f dB")

plt.title("Mel-spectrogram")

plt.tight_layout()

plt.show()

Mel-Frequency Cepstral Coefficients

What is cepstral? The term “cepstral” comes from the cepstrum, a mathematical transformation of a signal. The (real) cepstrum is computed by taking the Fourier transform of a signal, taking the logarithm of its magnitude spectrum and then applying an inverse Fourier transform. The result is a new set of coefficients in the cepstral (or “quefrency”) domain that provides a different representation of the signal.

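The cepstral representation is often used in signal processing because it can help separate different sources of variation in the signal. Taking the logarithm turns a product of spectra into a sum, so components that were multiplied in the frequency domain become additive and easier to separate. For example, in speech processing, the cepstral representation can separate the vocal tract characteristics of a speaker (a slowly varying spectral envelope) from the fundamental frequency of their voice (a regular pattern of harmonics).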
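A small NumPy sketch of the real cepstrum (the inverse FFT of the log magnitude spectrum) shows the source/filter idea in action: a periodic, voice-like signal produces a cepstral peak at the quefrency equal to its period, from which the fundamental frequency can be read off. The 200 Hz harmonic test signal and the 120 to 400 Hz search range are assumptions for this example. The frame spans exactly 20 periods, so no window is applied here, whereas real speech frames would normally be windowed.

```python
import numpy as np

sr = 16000                 # sample rate in Hz
f0 = 200.0                 # fundamental frequency of the test signal
n = 1600                   # frame length: exactly 20 periods of 80 samples

# Harmonic signal: the first 20 harmonics of 200 Hz
t = np.arange(n) / sr
frame = sum(np.cos(2 * np.pi * k * f0 * t) for k in range(1, 21))

# Real cepstrum: inverse FFT of the log magnitude spectrum
log_magnitude = np.log(np.abs(np.fft.rfft(frame)) + 1e-10)
cepstrum = np.fft.irfft(log_magnitude, n)

# Search for a peak between the quefrencies of 400 Hz and 120 Hz
q_min, q_max = int(sr / 400), int(sr / 120)
peak_quefrency = q_min + np.argmax(cepstrum[q_min:q_max + 1])
estimated_f0 = sr / peak_quefrency

print(f"peak at {peak_quefrency} samples -> {estimated_f0:.1f} Hz")
```

For this signal, the peak lands at 80 samples (one period at 16 kHz), giving 200 Hz. The low-quefrency coefficients, by contrast, describe the smooth spectral envelope, which is the part MFCCs keep.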

“Mel-Frequency Cepstral Coefficients” (MFCCs) refers to a type of cepstral analysis commonly used in speech and music processing. In MFCCs, the frequency bands are arranged according to the mel scale, a perceptual scale of pitch based on human hearing, rather than a linear frequency scale, and a discrete cosine transform (DCT) of the log mel-band energies takes the place of the inverse Fourier transform. The resulting cepstral coefficients are then used as features for various classification and analysis tasks.

Mel-Frequency Cepstral Coefficients (MFCCs) are commonly used for music classification tasks. Here are a few examples:

- Genre classification: MFCCs extracted from music recordings can be used to classify them into different genres. For instance, a classifier can be trained on MFCCs extracted from rock, pop, jazz and classical songs and then predict the genre of a new song from its MFCCs.

- Mood classification: MFCCs can help predict the mood of a music recording. For instance, a classifier can be trained on MFCCs extracted from songs of different moods, such as happy, sad or calm, and then predict the mood of a new song.

- Instrument recognition: MFCCs can help identify the musical instruments played in a recording. For instance, a classifier trained on MFCCs from recordings of guitar, piano or violin can predict the instrument played in a new recording.

- Singer identification: MFCCs can help identify a song’s singer. For instance, a classifier trained on MFCCs from recordings of different singers can predict the singer of a new song.

These are just a few examples of music classification tasks where MFCCs are commonly used. Other tasks where MFCCs are used include speech recognition, speaker recognition, and audio event detection.

Mel-Frequency Cepstral Coefficients (MFCCs) can be computed with the librosa.feature.mfcc.

# extract MFCCs

mfccs = librosa.feature.mfcc(y=sacrifice_signal, sr=sample_rate)

mfccs

array([[-718.0983 , -714.62036 , -714.26794 , ..., -701.2012 ,

-706.69806 , -710.34985 ],

[ 13.860107 , 18.418316 , 18.285805 , ..., 34.14977 ,

24.460789 , 21.746822 ],

[ 13.0396805 , 16.68719 , 15.259602 , ..., 24.350578 ,

20.935247 , 20.20428 ],

...,

[ -6.753648 , -6.3677974 , -3.5676217 , ..., -0.77700734,

-2.8421237 , -5.1242743 ],

[ -6.150442 , -5.963284 , -2.7150192 , ..., -0.83888555,

-3.8434372 , -4.993577 ],

[ -5.4489803 , -5.6858215 , -2.7151508 , ..., -3.6655302 ,

-6.600809 , -5.61689 ]], dtype=float32)

Alternatively, we can compute the mel-spectrogram first and pass its decibel-scaled version to librosa.feature.mfcc through the S parameter:

# Compute the mel-spectrogram

mel_spectrogram = librosa.feature.melspectrogram(y=signal, sr=sr)

# Compute the Mel-frequency cepstral coefficients (MFCCs)

mfccs = librosa.feature.mfcc(S=librosa.power_to_db(mel_spectrogram), sr=sr)

These code examples demonstrate the basic usage of librosa’s mel-spectrogram and MFCC functions, which can be easily modified and extended for different audio and music analysis tasks.
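By default, librosa.feature.mfcc takes a decibel-scaled mel spectrogram and applies a type-II discrete cosine transform with orthonormal scaling along the mel axis, keeping the first n_mfcc=20 coefficients. The NumPy sketch below reproduces only that last DCT step, on a random stand-in for a dB-scaled mel spectrogram. The DCT matrix is built by hand to avoid extra dependencies; scipy.fft.dct(..., type=2, norm='ortho', axis=0) computes the same transform.

```python
import numpy as np

def dct_ii_ortho_matrix(n):
    """Orthonormal DCT-II basis: row k is the k-th cosine basis vector."""
    k = np.arange(n)[:, None]
    i = np.arange(n)[None, :]
    basis = np.cos(np.pi * k * (2 * i + 1) / (2 * n))
    basis[0] *= np.sqrt(1.0 / n)
    basis[1:] *= np.sqrt(2.0 / n)
    return basis

n_mels, n_frames, n_mfcc = 128, 10, 20
rng = np.random.default_rng(0)
# Stand-in for librosa.power_to_db(mel_spectrogram): values in dB
log_mel_db = rng.uniform(-80.0, 0.0, size=(n_mels, n_frames))

dct = dct_ii_ortho_matrix(n_mels)
mfccs = (dct @ log_mel_db)[:n_mfcc]   # keep the first 20 coefficients per frame

print(mfccs.shape)
```

The first coefficient equals sqrt(n_mels) times the average dB level of the frame, which is why the first row of the MFCC output printed earlier is strongly negative; the higher coefficients describe the shape of the spectral envelope.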

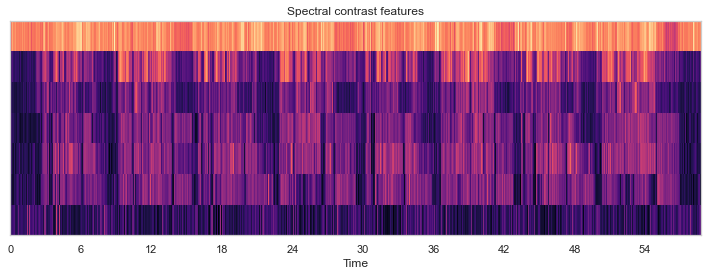

Spectral contrast

In audio signal processing, spectral contrast is a feature that measures, within each frequency sub-band, the difference between the spectral peaks and the spectral valleys. It captures how “peaky” (tonal) or “flat” (noise-like) the spectral shape of an audio signal is in different frequency regions.

In Python’s librosa library, the spectral_contrast function computes the spectral contrast of an audio signal using the following steps:

- Compute the magnitude spectrogram of the signal with the short-time Fourier transform.

- Divide the frequency axis into octave-spaced sub-bands: by default, six bands starting at fmin=200 Hz, plus one band covering the frequencies below fmin.

- Within each sub-band and frame, sort the magnitudes and average the strongest and the weakest fraction of them (2% by default, set by the quantile parameter) to obtain the spectral peak and the spectral valley.

- Compute the spectral contrast as the difference between the peak and the valley on a decibel scale.

The resulting feature is a matrix with one row per sub-band (seven rows with the default settings) and one column per frame. A high spectral contrast means that clear, narrow-band components, such as harmonic partials, stand out from the background in that band. A low spectral contrast indicates a flatter, more noise-like spectrum in which peaks and valleys have similar magnitudes.
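The per-band procedure can be sketched for a single magnitude-spectrum frame in NumPy. This is a simplified illustration of the idea behind librosa.feature.spectral_contrast (octave bands from fmin=200 Hz, peak and valley as the mean of the top and bottom 2% of each band, difference computed with 10 · log10); librosa's exact band-edge handling differs slightly, and the helper name and toy spectra are hypothetical.

```python
import numpy as np

def spectral_contrast_frame(mag, sr, fmin=200.0, n_bands=6, quantile=0.02):
    """Peak-minus-valley contrast (in dB) for each octave sub-band of one frame."""
    n_fft = 2 * (len(mag) - 1)
    freqs = np.arange(len(mag)) * sr / n_fft
    # Band edges: 0, fmin, 2*fmin, 4*fmin, ..., and finally the Nyquist frequency
    edges = np.concatenate(([0.0], fmin * 2.0 ** np.arange(n_bands), [sr / 2.0]))
    contrast = np.zeros(n_bands + 1)
    for k in range(n_bands + 1):
        band = np.sort(mag[(freqs >= edges[k]) & (freqs <= edges[k + 1])])
        n = max(1, int(round(quantile * band.size)))
        valley = band[:n].mean()    # average of the weakest bins
        peak = band[-n:].mean()     # average of the strongest bins
        contrast[k] = 10 * np.log10(max(peak, 1e-10)) - 10 * np.log10(max(valley, 1e-10))
    return contrast

sr = 22050
flat = np.ones(1025)                 # noise-like frame: no peak stands out
tonal = flat.copy()
tonal[93] = 100.0                    # strong partial near 1 kHz (bin 93 is about 1001 Hz)

print(np.round(spectral_contrast_frame(flat, sr), 1))
print(np.round(spectral_contrast_frame(tonal, sr), 1))
```

The flat frame gives zero contrast in every band, while the single strong partial raises the contrast only in the 800 to 1600 Hz band, matching the interpretation of high and low values.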

# Compute the spectral contrast features

spectral_contrast = librosa.feature.spectral_contrast(y=sacrifice_signal, sr=sample_rate, n_fft=2048, hop_length=512)

# Plot the spectral contrast features

plt.figure(figsize=(10, 4))

librosa.display.specshow(spectral_contrast, sr=sample_rate, hop_length=512, x_axis="time")

plt.title("Spectral contrast features")

plt.tight_layout()

plt.show()

Spectral contrast summarises, for each sub-band, how strongly the spectral peaks stand out from the valleys. It can be used in machine learning applications for audio classification, speaker identification and speech recognition tasks.

Here are some steps to use spectral contrast in machine learning applications:

- Feature extraction: Spectral contrast is computed from the frequency spectrum of an audio signal. The signal is converted into the frequency domain with a short-time Fourier transform, and the spectral contrast is computed in each sub-band as the difference between the spectral peaks and valleys.

- Preprocessing: Before using spectral contrast as a feature in machine learning applications, it is often helpful to preprocess the data to remove noise, filter out unwanted frequencies and normalise the signal.

- Training: The spectral contrast features can be used as input to a machine learning algorithm, which learns to recognise patterns in the data and make predictions based on them. The algorithm can be trained on a labelled dataset, where each audio sample is labelled with its corresponding class (e.g., music, speech, noise).

- Testing: Once the algorithm has been trained, it can be tested on a new dataset to evaluate its performance with metrics such as accuracy, precision, recall and F1-score.
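Most classifiers expect one fixed-length vector per audio clip, while spectral contrast is a matrix with one column per frame, so clips of different lengths produce matrices of different widths. A common, simple approach (an assumption here, not the post's method) is to summarise each feature row by its mean and standard deviation over time and then standardise each dimension. The random matrices below are stand-ins for librosa.feature.spectral_contrast output, which has seven rows with the default n_bands=6.

```python
import numpy as np

def pool_over_time(features):
    """Turn a (n_features, n_frames) matrix into a vector of length 2 * n_features."""
    return np.concatenate([features.mean(axis=1), features.std(axis=1)])

rng = np.random.default_rng(42)
# Stand-ins for spectral-contrast matrices of three clips with different lengths
clips = [rng.normal(20.0, 5.0, size=(7, n_frames)) for n_frames in (120, 300, 87)]

X = np.stack([pool_over_time(c) for c in clips])   # one row per clip

# Standardise each feature dimension (zero mean, unit variance across clips)
X_scaled = (X - X.mean(axis=0)) / X.std(axis=0)

print(X.shape)
```

In a real pipeline, compute the standardisation statistics on the training set only and reuse them for the test set, for example with scikit-learn's StandardScaler.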

Some specific examples of using spectral contrast in machine learning applications include:

- Speaker identification: Spectral contrast can be used to extract features from speech signals, which can be used to identify individual speakers.

- Music genre classification: Spectral contrast can be used to extract features from music signals, which can be used to classify the music into different genres (e.g., rock, pop, classical).

- Environmental sound classification: Spectral contrast can be used to extract features from environmental audio (e.g., bird songs, car horns, sirens), which can be used to classify the sounds into different categories.

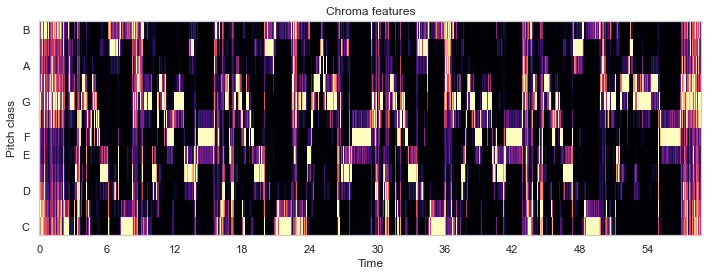

Chroma Features

Chroma features are audio features that capture music’s harmonic and melodic structure. Compared to raw audio samples, they represent audio signals in a more abstract and musically meaningful way.

Chroma features are derived from the chromagram, a 12-dimensional representation of an audio signal in which each dimension represents one of the 12 pitch classes (C, C#, D, D#, E, F, F#, G, G#, A, A#, B). The chromagram is computed by transforming the audio signal into the frequency domain and summing the energy of all frequencies that belong to each pitch class, regardless of their octave.
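The folding of frequencies into 12 pitch classes can be sketched with the standard equal-temperament mapping: a frequency f corresponds to MIDI note 69 + 12 · log2(f / 440), and that note number modulo 12 gives the pitch class (0 = C, 9 = A). The NumPy sketch below builds a chroma vector from a toy list of spectral peaks; it illustrates the idea only and is not how librosa.feature.chroma_stft builds its smoother chroma filters. The helper names and the example chord are hypothetical.

```python
import numpy as np

PITCH_CLASSES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]

def pitch_class(freq_hz):
    """Map a frequency to a pitch-class index (0 = C, ..., 11 = B)."""
    midi = 69 + 12 * np.log2(freq_hz / 440.0)
    return int(np.round(midi)) % 12

def chroma_vector(freqs, energies):
    """Sum energy per pitch class across all octaves, then normalise to max = 1."""
    chroma = np.zeros(12)
    for f, e in zip(freqs, energies):
        if f > 0:
            chroma[pitch_class(f)] += e
    return chroma / chroma.max()

# Toy spectrum: a C major triad (C4, E4, G4) plus C5 one octave higher
peaks = [261.63, 329.63, 392.00, 523.25]
energy = [1.0, 0.8, 0.6, 0.5]
chroma = chroma_vector(peaks, energy)

for name, value in zip(PITCH_CLASSES, chroma):
    print(f"{name:>2}: {value:.2f}")
```

Both C4 and C5 land in the C bin, which is exactly the octave invariance that makes chroma useful for chord and key analysis.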

Chroma features are widely used in music information retrieval (MIR) and machine learning tasks, such as music classification, genre recognition, and cover song identification. They help capture music’s harmonic and melodic content, which is often more important for these tasks than the raw audio signal.

Librosa provides several chroma variants, including chroma_stft (computed from the short-time Fourier transform), chroma_cqt (computed from the constant-Q transform) and chroma_cens (a smoothed, energy-normalised version). Here is a Python code example of computing chroma features with librosa:

# Compute the chroma features

chroma_features = librosa.feature.chroma_stft(y=sacrifice_signal, sr=sample_rate)

# Plot the chroma features

plt.figure(figsize=(10, 4))

librosa.display.specshow(chroma_features, sr=sample_rate, hop_length=512, x_axis="time", y_axis="chroma")

plt.title("Chroma features")

plt.tight_layout()

plt.show()

The computed chroma features are plotted using the specshow function from librosa.display, which displays the features as spectrograms. The chroma features capture the harmonic content of the audio signal and provide a compact representation that can be used for various audio and music analysis tasks and in music retrieval.

This code example demonstrates the primary usage of the chroma feature computation function in Librosa, which computes the chroma features from the waveform using the short-time Fourier transform (STFT).

The Fourier transform is a mathematical tool for representing signals in the frequency domain. It provides a way to transform a time-domain signal into a frequency-domain representation, which can reveal important characteristics of the signal, such as its frequency content, harmonic structure, and power distribution.

The Fourier transform can be applied to a windowed segment of the sound signal, resulting in the short-time Fourier transform (STFT), which provides a time-frequency representation of the signal. The inverse Fourier transform can convert the frequency-domain representation back into a time-domain signal.
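The short-time Fourier transform amounts to slicing the signal into overlapping frames, windowing each frame and taking its FFT. Below is a minimal NumPy sketch with librosa's default n_fft=2048 and hop_length=512; it is a hypothetical helper that uses NumPy's Hann window and skips the centre padding that librosa.stft applies by default. The two-tone test signal is an assumption for illustration.

```python
import numpy as np

def stft_sketch(y, n_fft=2048, hop_length=512):
    """Return a complex (1 + n_fft // 2, n_frames) time-frequency matrix."""
    window = np.hanning(n_fft)
    n_frames = 1 + (len(y) - n_fft) // hop_length
    frames = np.stack([y[i * hop_length : i * hop_length + n_fft] * window
                       for i in range(n_frames)], axis=1)
    return np.fft.rfft(frames, axis=0)   # FFT of every column (frame)

sr = 22050
t = np.arange(sr) / sr                   # one second
# The first half second is a 440 Hz tone, the second half an 880 Hz tone
y = np.where(t < 0.5, np.sin(2 * np.pi * 440 * t), np.sin(2 * np.pi * 880 * t))

D = stft_sketch(y)
freqs = np.fft.rfftfreq(2048, d=1 / sr)
dominant = freqs[np.argmax(np.abs(D), axis=0)]  # strongest frequency per frame
print(D.shape, dominant[0], dominant[-1])
```

A single FFT of the whole second would show both tones but not when they occur; the frame-by-frame view reveals the change from 440 Hz to 880 Hz halfway through. Because librosa.stft pads the signal to centre its frames, it returns a few more frames than this sketch.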

The Fourier transform is a cornerstone of many signal-processing techniques and is widely used in various fields, including audio and music processing, telecommunications, image processing, and scientific computing.

Effects

The librosa library can also apply audio effects such as pitch shifting and time stretching, which we explore in this section.

Pitch shift

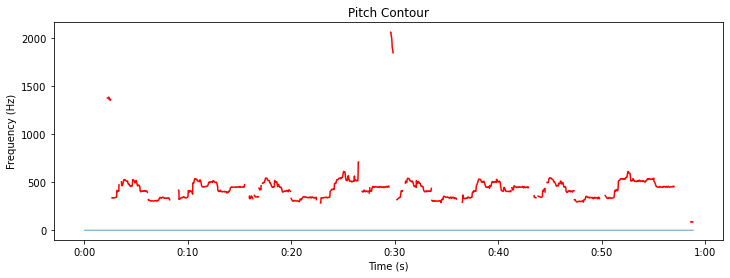

Pitch is an essential aspect of music, as it allows us to distinguish between musical notes and recognise melodies. Pitch is usually quantified by the fundamental frequency, measured in hertz (Hz), the number of vibrations per second. The standard tuning frequency for the A note above middle C (A4) in Western music is 440 Hz, which serves as a reference for tuning other notes.
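In twelve-tone equal temperament, each semitone multiplies the frequency by 2^(1/12), so a note n semitones away from A4 has frequency 440 · 2^(n/12) Hz. This plain-Python sketch (the helper names are hypothetical) computes MIDI-numbered note frequencies and the frequency ratio of a shift by n_steps semitones, the unit used by librosa.effects.pitch_shift. librosa.midi_to_hz and librosa.note_to_hz perform the same note conversions.

```python
import math

def midi_to_hz(midi_note):
    """Equal-temperament frequency of a MIDI note number (A4 = 69 = 440 Hz)."""
    return 440.0 * 2 ** ((midi_note - 69) / 12)

def shift_ratio(n_steps):
    """Frequency ratio for a pitch shift of n_steps semitones."""
    return 2 ** (n_steps / 12)

print(f"A4 = {midi_to_hz(69):.2f} Hz")   # 440.00 Hz
print(f"C4 = {midi_to_hz(60):.2f} Hz")   # middle C
print(f"A4 shifted by -2 semitones: {440 * shift_ratio(-2):.2f} Hz")
print(f"A4 shifted by +5 semitones: {440 * shift_ratio(5):.2f} Hz")
print(f"12 semitones up doubles the frequency: {math.isclose(shift_ratio(12), 2.0)}")
```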

Pitch perception can vary between individuals and can be affected by age, hearing loss and musical training. Some people have perfect (absolute) pitch, which means they can identify or produce specific musical notes without any external reference.

Here is an example of plotting the pitch of our audio file using the librosa library in Python. We employ the librosa.pyin function, which takes an audio time series as input and returns, for each frame, an estimate of the fundamental frequency (f0, NaN for unvoiced frames by default), a boolean flag indicating whether the frame is voiced, and the voicing probability.

# Load an audio file

y, sr = librosa.load(sacrifice_file)

# Estimate the fundamental frequency with the probabilistic YIN (pYIN) algorithm

f0, voiced_flag, voiced_probs = librosa.pyin(y, fmin=librosa.note_to_hz('C2'), fmax=librosa.note_to_hz('C7'))

# Plot pitch contour

fig, ax = plt.subplots(figsize=(12, 4))

librosa.display.waveshow(y, sr=sr, alpha=0.5, ax=ax)

ax.set_xlabel('Time (s)')

ax.set_ylabel('Amplitude')

# Plot the f0 contour on a second y-axis, since it is measured in Hz

ax_f0 = ax.twinx()

ax_f0.plot(librosa.times_like(f0, sr=sr), f0, color='r')

ax_f0.set_ylabel('Frequency (Hz)')

ax.set_title('Pitch Contour')

plt.show()

In this example, we first load the audio file using librosa.load. Then, we estimate the pitch with the “Probabilistic YIN” (pYIN) algorithm implemented in librosa.pyin. Finally, we plot the waveform with librosa.display.waveshow and draw the pitch contour on a second y-axis, because frequencies in Hz and waveform amplitudes have very different scales. The resulting plot shows how the pitch of the audio signal changes over time.
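pYIN builds on the YIN algorithm, which looks for the lag at which a frame best matches a shifted copy of itself. The NumPy sketch below implements the core of plain YIN for one frame (difference function, cumulative mean normalisation and an absolute threshold), without YIN's parabolic interpolation or the probabilistic stage that librosa.pyin adds. The threshold of 0.1, the 65 to 2000 Hz search range and the test signals are assumed values for illustration.

```python
import numpy as np

def yin_f0(frame, sr, fmin=65.0, fmax=2000.0, threshold=0.1):
    """Estimate the fundamental frequency of one frame; None if unvoiced."""
    tau_min, tau_max = int(sr / fmax), int(sr / fmin)
    w = len(frame) - tau_max   # comparison window length
    # Difference function d(tau): how much the frame differs from itself shifted by tau
    d = np.array([np.sum((frame[:w] - frame[tau:tau + w]) ** 2)
                  for tau in range(tau_max + 1)])
    # Cumulative mean normalised difference (defined as 1 at tau = 0)
    cmnd = np.ones(tau_max + 1)
    cmnd[1:] = d[1:] * np.arange(1, tau_max + 1) / np.maximum(np.cumsum(d[1:]), 1e-12)
    # First lag below the threshold, then walk down to the local minimum
    for tau in range(tau_min, tau_max):
        if cmnd[tau] < threshold:
            while tau + 1 < tau_max and cmnd[tau + 1] < cmnd[tau]:
                tau += 1
            return sr / tau
    return None

sr = 16000
t = np.arange(2048) / sr
frame = 0.5 * np.sin(2 * np.pi * 220.0 * t)
print(yin_f0(frame, sr))       # close to 220 Hz
noise = np.random.default_rng(0).normal(size=2048)
print(yin_f0(noise, sr))       # None: white noise has no period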

Pitch shift is the process of altering the pitch of an audio signal, which can be either increasing or decreasing its frequency without affecting its duration. There are several reasons one might need to do a pitch shift, including adapting to an individual singer’s or musician’s vocal range, harmonising a lead vocal or instrumental track with other tracks in a recording, and creating special effects, amongst other applications.

With librosa.effects.pitch_shift, we can shift the pitch by the number of semitones given in the n_steps parameter: positive values raise the pitch and negative values lower it. Here, we shift the sound down by two semitones by setting n_steps=-2.0.

# Shift the pitch down by two semitones

pitch_shifted_waveform = librosa.effects.pitch_shift(y=y, sr=sr, n_steps=-2.0)

Finally, we use the librosa.util.normalize function to normalize the output signal and save it to a new WAV file using the soundfile.write function from the soundfile library.

# Normalize the output signal

pitch_shifted = librosa.util.normalize(pitch_shifted_waveform)

# Save the output signal to a WAV file

sf.write("voice_lower.wav", pitch_shifted, sr)

Next, we can easily play the sound in Jupyter notebooks using:

Audio(pitch_shifted, rate=sr)

Note that librosa.effects.pitch_shift works by time-stretching the signal with a phase vocoder and then resampling it, which can introduce some artefacts and affect the quality of the output. Other pitch-shifting techniques are available in other audio processing libraries, and you can experiment with them to achieve different effects.

Now, let’s have some fun and create a “helium” voice by shifting the pitch up by five semitones.

# Shift the pitch up by five semitones

pitch_shifted_helium_voice = librosa.effects.pitch_shift(y=y, sr=sr, n_steps=5.0)

Audio(pitch_shifted_helium_voice, rate=sr)

Time stretch

We can also stretch our sound signal in time. Let’s speed up the “helium” voice.

# Speed up by a factor of 2 (rate > 1 makes the signal shorter, rate < 1 makes it longer)

time_stretched_waveform = librosa.effects.time_stretch(pitch_shifted_helium_voice, rate=2)

Audio(time_stretched_waveform, rate=sr)

Tone generation

Besides pitch shifting, time stretching and the tone generation coded below, librosa provides many more advanced sound processing capabilities. I recommend trying out some of the examples in its API documentation.

# Set the parameters for the WAV file

duration = 5.0 # seconds

frequency = 440.0 # Hz (A440)

# Generate the audio data for the WAV file

num_samples = int(duration * 22050) # 5 seconds at 22,050 Hz

data = librosa.tone(frequency, sr=22050, length=num_samples)

Audio(data, rate=22050)
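The code above produces a steady tone rather than beats. If you want an actual beat, one option is a metronome-style click track. librosa offers librosa.clicks for this, and the NumPy sketch below builds a similar signal by hand so the timing arithmetic is visible. The tempo, click pitch and click length are arbitrary assumptions.

```python
import numpy as np

sr = 22050          # sample rate (Hz)
bpm = 120           # tempo: 120 beats per minute = one beat every 0.5 s
duration = 5.0      # seconds

# A 50 ms, 1 kHz click with an exponentially decaying envelope
click_len = int(0.05 * sr)
t_click = np.arange(click_len) / sr
click = np.sin(2 * np.pi * 1000.0 * t_click) * np.exp(-t_click * 60.0)

# Place one click at the start of every beat
beat_interval = int(round(60.0 / bpm * sr))   # samples between beats
track = np.zeros(int(duration * sr))
beat_starts = np.arange(0, len(track) - click_len + 1, beat_interval)
for start in beat_starts:
    track[start:start + click_len] += click

print(len(beat_starts), "beats in", duration, "seconds")
```

In a notebook, Audio(track, rate=sr) plays the result.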

All code was tested in a Jupyter notebook and is available in my GitHub repository.

Did you know that with mubert, you can instantly produce custom tracks that flawlessly complement your content across various platforms, such as YouTube, TikTok, podcasts, and videos?

Sometimes, it is needed to mix sound files and speech. You can try using ElevenLabs.io for fantastic natural voices. I am affiliated with them, and I love their text-to-speech engine. ElevenLabs.io is very helpful for creating voiceovers for podcasts and videos.

Conclusion

That’s a quick overview of audio processing in Python using WAV files. You can do much more with audio processing, but this should give you a good starting point. I have also introduced the spectral features and briefly explained the Fourier transform, allowing us to extract and analyse features from raw audio data that can be used to train and improve machine learning models. I will create a Machine Learning example in one of my next posts. Indeed, it will be in Python!

Did you like this post? Please let me know if you have any comments or suggestions.

Disclaimer: I used ChatGPT while preparing this post, which is why ChatGPT is listed in my references section. However, most of the text was rewritten by me, as a human, and spell-checked with Grammarly.

Soundtracks

I thank LoopMaiden for these beautiful soundtracks used in this post:

References

2. New Chat (chatGPT by OpenAI)

4. python-sounddevice, version 0.4.6

6. wave — Read and write WAV files


About Elena: Elena, a PhD in Computer Science, simplifies AI concepts and helps you use machine learning.
