Python

AI

Life

Weekly

Apps

Git

ChatGPT

Blogging

LLM

TensorFlow

ML

DL

NLP

GenAI

Preprocessing

SEO

AI Law

AI News

AI Safety

AI-Dev

CLI

Ethics

Agentic

Conda

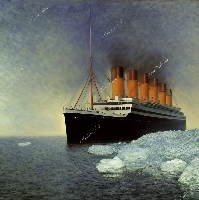

History

News

PhD

Regression

Research

Series

Setup

AI Engineering

AI Regulation

Antigravity

Automation

CNN

Classification

Cursor

Data

Development Tools

Edge AI

Fine Tuning

Food

GitHub

IDE

Kaggle

Mac

Mixed Precision

OS

RS

Robots

Study

Transfer Learning
